Complex AI workloads, such as large-scale medical and biological image analysis, are pushing even the largest GPU clusters to their limits. At a supercomputing facility in Poland, researchers are testing whether quantum processors could help support such demanding workloads.

The project brings together UK-based ORCA Computing and the Poznań Supercomputing and Networking Center (PSNC). There, ORCA’s photonic quantum systems have been integrated directly into a production high-performance computing environment and embedded in machine-learning workflows, rather than operated as standalone experimental machines.

The approach follows a familiar pattern in data center evolution: CPUs handle general-purpose computing, GPUs provide massive parallelism for AI training, and now quantum processors are joining them to tackle problems that classical systems struggle with. In this hybrid model, quantum processors are used to explore complex probability spaces, while GPUs handle the large-scale numerical processing required for AI training.

According to James Fletcher, head of solutions architecture at ORCA Computing, this mirrors how quantum technology is likely to be adopted in practice: through an iterative feedback loop between quantum and classical systems.

“Hybrid algorithms use a variational approach where you run an iteration, measure the outcome and refine it using classical and quantum computing,” Fletcher said. He added that near-term quantum value depends on combining the systems, particularly for machine-learning and optimization workloads.
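The variational approach Fletcher describes can be illustrated with a toy loop. This is a minimal sketch under stated assumptions, not ORCA’s or PSNC’s implementation: a single-qubit circuit, simulated classically, stands in for the quantum processor. Each iteration “runs” the circuit with a finite number of measurement shots, estimates a gradient with the parameter-shift rule, and lets a classical optimizer refine the circuit parameter. The function names, learning rate and shot count are hypothetical choices for illustration.

```python
import math
import random
from typing import Tuple


def run_circuit(theta: float, shots: int, rng: random.Random) -> float:
    """Hypothetical stand-in for a quantum processor (simulated classically).

    Prepares Ry(theta)|0>, measures in the Z basis `shots` times and
    returns the estimated expectation value <Z> = P(0) - P(1).
    """
    p0 = math.cos(theta / 2) ** 2  # Born-rule probability of outcome 0
    zeros = sum(1 for _ in range(shots) if rng.random() < p0)
    return (2 * zeros - shots) / shots


def parameter_shift_grad(theta: float, shots: int, rng: random.Random) -> float:
    """Estimate d<Z>/dtheta from two shifted circuit runs (parameter-shift rule)."""
    plus = run_circuit(theta + math.pi / 2, shots, rng)
    minus = run_circuit(theta - math.pi / 2, shots, rng)
    return (plus - minus) / 2


def variational_loop(theta0: float = 0.1, lr: float = 0.4, iterations: int = 100,
                     shots: int = 2000, seed: int = 7) -> Tuple[float, float]:
    """Minimize <Z> by alternating (simulated) quantum runs and classical updates."""
    rng = random.Random(seed)
    theta = theta0
    for _ in range(iterations):
        grad = parameter_shift_grad(theta, shots, rng)  # quantum step: run and measure
        theta -= lr * grad                              # classical step: refine the parameter
    return theta, run_circuit(theta, shots, rng)


if __name__ == "__main__":
    theta, energy = variational_loop()
    # The exact minimum is <Z> = -1 at theta = pi; shot noise keeps the estimate close to it.
    print(f"theta = {theta:.3f}, estimated <Z> = {energy:.3f}")
```

On real hardware, `run_circuit` would be replaced by a call to a quantum processor through a vendor SDK, while the classical update runs on CPUs or GPUs. The run, measure and refine structure of the loop is the part that carries over.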

Where AI fits into quantum and vice versa

Quantum and classical systems repeatedly exchange results, refining the solution step by step. Quantum processors generate outputs, such as samples from complex probability distributions, that are difficult for classical systems to produce at scale; these outputs are then fed into conventional AI training pipelines running on GPUs.

At Poznań, early demonstrations rely on standard machine-learning benchmarks. The team plans to extend the framework toward more demanding real-world imaging workloads, where hybrid systems could offer advantages in analyzing extremely complex data.

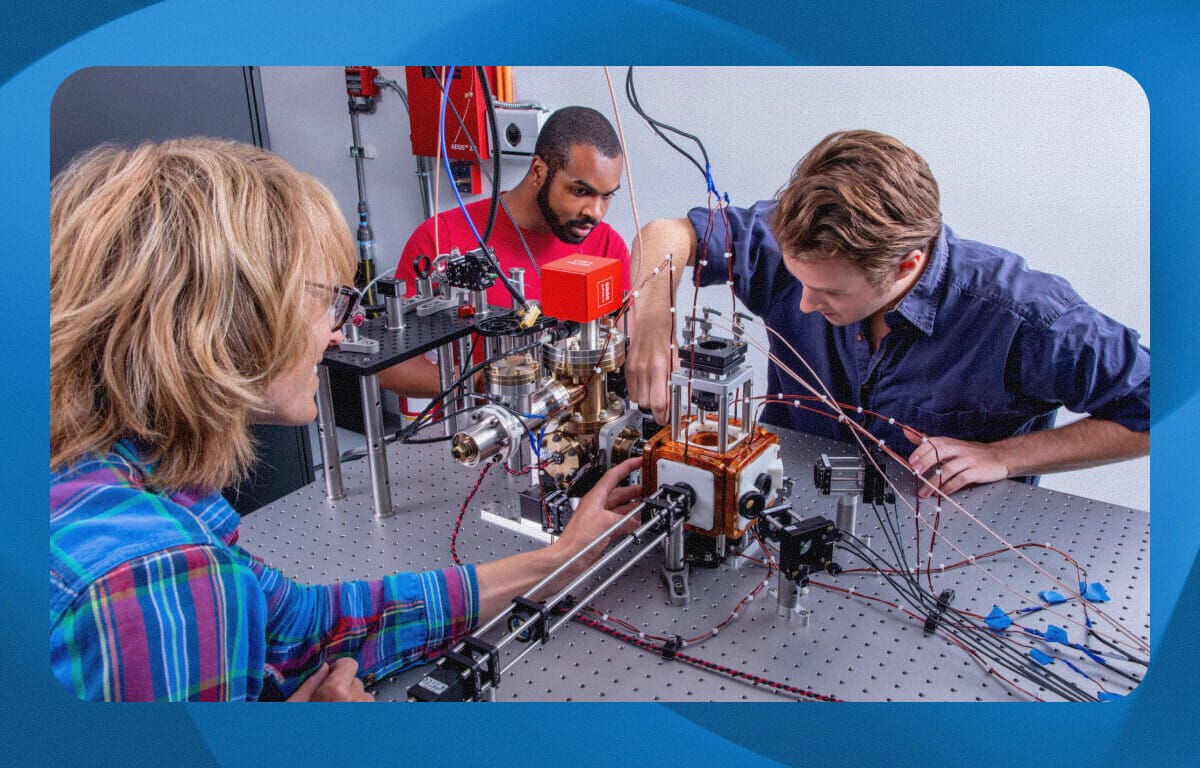

Credit: QuEra

This division of labor helps explain why quantum computers are entering data centers as partners to AI infrastructure rather than as replacements for it. “Quantum computing is good at evaluating many different alternatives and reaching high levels of entanglement,” said Yuval Boger, chief commercial officer at QuEra. “But it’s very bad at math and processing large amounts of data. Any serious application requires both classical and quantum computing.”

Instead of pursuing isolated demonstrations of quantum advantage, vendors and researchers are focusing on hybrid architectures that integrate quantum processors into existing GPU-based environments.

From experiments to real workloads

That hybrid approach is emerging as quantum hardware becomes powerful enough to support real workflows but remains too fragile to operate independently. Today’s noisy intermediate-scale quantum (NISQ) systems can perform useful calculations, but their error rates mean they depend on classical and AI-based techniques for error correction, control and validation.

Integration requires fast, low-latency links between quantum hardware and GPU-based infrastructure. Juha Vartiainen, co-founder and chief of global affairs at IQM, pointed to the company’s recent work with NVIDIA. “It allows part of the quantum error correction to be done in the racks of GPUs, using AI and other methodologies,” he said.

By processing quantum error correction and control workloads on GPUs and feeding the results back in real time, hybrid architectures aim to make today’s error-prone machines usable within conventional high-performance computing (HPC) and cloud workflows.
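The classical share of error correction is mainly decoding: turning syndrome measurements from the quantum processor into corrections fast enough to keep up with the hardware. As a deliberately small sketch, assuming a bit-flip repetition code rather than the larger codes used in practice (and not IQM’s or NVIDIA’s actual method), the decoder below sees only parity-check results and picks the lightest error pattern consistent with them. The simulated noise model, code length and error rate are illustrative assumptions.

```python
import random
from typing import List


def measure_syndrome(bits: List[int]) -> List[int]:
    """Parity of each neighbouring pair of data bits.

    On real hardware these parities come from ancilla measurements, so the
    decoder never sees the data bits themselves.
    """
    return [bits[i] ^ bits[i + 1] for i in range(len(bits) - 1)]


def decode(syndrome: List[int]) -> List[int]:
    """Minimum-weight decoder for the bit-flip repetition code (odd length).

    Exactly two error patterns, complements of each other, reproduce any
    given syndrome; return the one with fewer flips.
    """
    error = [0]
    for s in syndrome:
        error.append(error[-1] ^ s)  # propagate parities to rebuild a candidate error
    if sum(error) > len(error) // 2:
        error = [1 - e for e in error]  # the complement has fewer flips
    return error


def logical_error_rate(n: int = 5, p: float = 0.05, trials: int = 20000,
                       seed: int = 1) -> float:
    """Simulate independent bit flips on an encoded logical 0 and count failures."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        noisy = [int(rng.random() < p) for _ in range(n)]  # logical 0 after noise
        correction = decode(measure_syndrome(noisy))         # classical decoding step
        corrected = [b ^ c for b, c in zip(noisy, correction)]
        failures += any(corrected)  # a leftover flip means the logical bit flipped
    return failures / trials


if __name__ == "__main__":
    rate = logical_error_rate()
    print(f"physical error rate 0.05 -> logical error rate {rate:.4f}")
```

Production decoders handle far larger codes and must return corrections within tight latency budgets, which is why the low-latency link between quantum hardware and nearby GPU racks matters.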

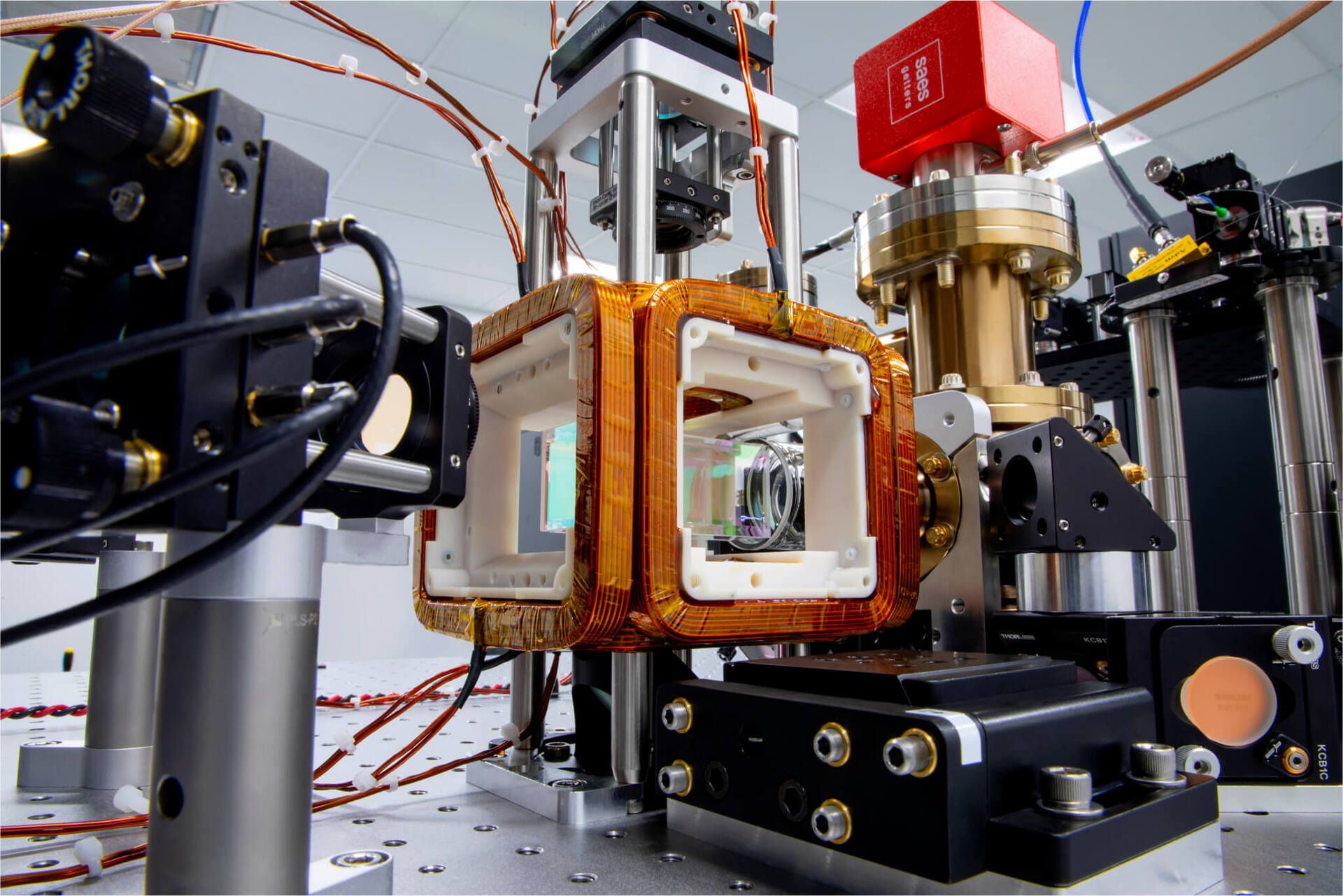

Hardware made by IQM. Credit: IQM

Florian Preis, vice president of quantum solutions at Quantum Brilliance, said the emphasis is shifting away from scientific proofs of concept and toward engineering challenges such as orchestration, security and reliability. “The software stack for hybrid quantum-HPC integration is still fragmented,” he said, noting that vendors often develop their own scheduling and control tools for the same facilities, making portability harder than necessary.

Together, these efforts point to a future in which quantum processors are treated less as exotic research instruments and more as specialized accelerators embedded within conventional enterprise infrastructure, working alongside CPUs and GPUs.

How quantum and AI strengthen each other

In hybrid systems, classical machine-learning techniques are increasingly used to stabilize quantum hardware, while quantum processors are beginning to contribute specialized data and optimization methods to AI workflows.

Boger sees AI playing a growing role in making quantum systems more reliable. “We see AI helping do better error correction and design better computers, and quantum computers generating data sets that can be used to train better AI models,” he said.

That feedback loop is already visible in hybrid research projects. Fletcher pointed to experiments where quantum processors are embedded directly into machine-learning workflows, using quantum circuits to generate specialized outputs that are then processed and refined by classical AI running on GPUs.

Vartiainen said quantum-generated data could be particularly valuable for training AI systems. “A quantum computer can make a good kind of entropy from the right kind of distributions,” he said. “That can be important for training AI models, especially when you want to generate rare input data that accelerates neural network training.”

AI could also lower the barrier to working with quantum systems. Programming quantum computers remains a specialist skill with a limited developer base, and Vartiainen sees large language models as one way to widen access. “Programming a quantum computer requires some special skills,” he said. “There are not many people in the world who can do it. Large language models can work as an interface for quantum computers.”

These developments suggest that progress in quantum computing will be driven as much by advances in AI software as by improvements in qubit hardware. Hybrid systems are becoming a two-way exchange, with classical AI techniques helping quantum machines function more effectively and quantum processors providing new tools for training and optimizing AI models.

The path to production

Customer demand is pushing hybrid quantum systems beyond laboratory demonstrations. According to Boger, customers increasingly want quantum hardware that is usable inside existing enterprise and HPC workflows, rather than demonstrations of isolated scientific milestones.

Boger pointed to a recent demonstration with Dell at the Supercomputing conference, where quantum systems were connected directly to conventional orchestration tools. “Dell’s customers are asking about quantum and want it integrated into their existing orchestrator,” he said. “The next generation of supercomputers is likely to have a quantum connector or component built in.”

The shift places engineering challenges ahead of theoretical ones. Fully fault-tolerant quantum computers remain years away, and in the meantime the industry is focused on making today’s hybrid systems reliable enough to operate inside real enterprise and HPC environments.

Preis argued that quantum hardware must fit into data centers the same way CPUs and GPUs do today. “Quantum hardware should ultimately sit wherever classical hardware does today, embedded directly within data centers or research facilities,” he said. “That enables tighter integration with CPUs and GPUs, simplifies hybrid workflow design and reduces operational friction.”

The path to production is likely to be shaped by incremental engineering progress. For now, hybrid systems are largely being validated on benchmarks rather than production workloads, but they point toward a future in which quantum processors sit inside enterprise data centers alongside classical and AI systems: partners to AI infrastructure, not replacements for it.